The Code Leak Epidemic Nobody Wants to Talk About

The moment when trading proprietary code for AI suggestions became the new normal.

Do you remember when licences and intellectual property protection meant something, and companies proudly marketed how well they guarded client code? Just a few years ago, if a single repository slipped outside the firewall, careers ended. Companies bragged in RFP responses about secure servers, NDA-wrapped contractors, and “client code never leaves our vault” guarantees. Security teams protected intellectual property.

Then ChatGPT dropped.

Almost overnight, the same responsible developers who always use the company’s VPN, encrypt their disks, and lock their computers started copy-pasting proprietary algorithms into public web forms. Entire codebases from payment processors, trading engines, and medical device firmware now fly unprotected to servers in undisclosed locations, where they are processed by models whose terms of service explicitly state: “We may use your inputs to train future versions.”

And the scary part? Most people do not care.

When Things Went Wrong Even for Big Players

Real-world evidence has piled up faster than you’d think - verifiable scandals that should be setting off alarms in every boardroom. These are not isolated incidents; they show a pattern of devs treating AI like a private notepad:

- Samsung: Engineers pasted proprietary semiconductor source code and confidential meeting notes into ChatGPT for debugging and summarization. Result? A company-wide ban and a mad scramble to assess the damage. Source

- Amazon: Staff fed internal code snippets into ChatGPT as a “coding assistant,” leading to outputs that eerily echoed company secrets. Lawyers issued urgent warnings, but the leaks were already out. Source

- JPMorgan Chase: Tech teams dumped proprietary trading algorithms into ChatGPT for quick analysis. The bank slapped a blanket block on the tool across its network. Source

- Walmart: Developers entered supply chain optimization code and vendor docs for troubleshooting; outputs started hinting at leaked internal details. Source

- Healthcare sector: Multiple occurrences of pasted patient data and medical device firmware source code sparked debates about HIPAA compliance. Source

Cyberhaven tracked 6,352 daily attempts per 100,000 employees to paste corporate data (mostly source code) into ChatGPT. Source

By 2025, LayerX found 77% of employees had leaked something, often on personal accounts that bypass corporate controls. Source

The Data Land Grab in Plain Sight

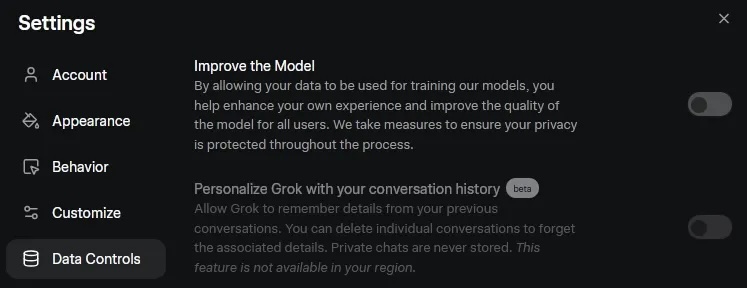

AI services often reserve the right to train on your inputs unless you pay a premium for “zero retention.” Typically, if you don’t pay, you are the product (in this case, your code is). Free tiers are honey pots by design, and even the Pro ones are questionable. Remember when “we’ll never sell your data” turned into billion-dollar GDPR fines? Sometimes breaking the promise simply pays off. We are in an era of intense market competition, driven by corporate greed, where morality is sidelined.

Privacy Path: Cutting Through the Hype

If an AI service offers multiple Pro tiers, pay attention: often only the highest one provides the privacy you want. Can you be entirely sure even then? It’s up to you, but I doubt it. It’s not that hard to look into the track record of a service or AI model provider, or even to gather evidence from it. Is their communication transparent and honest? Are they privacy-oriented? Or is data the core of their business? Let’s be realistic.

For commercial services, I trust and like JetBrains (I use WebStorm, btw). AI code editor Supermaven looked solid too, and they’re joining Cursor now, where I trust the highest Enterprise licence with Privacy Mode enabled organization-wide.

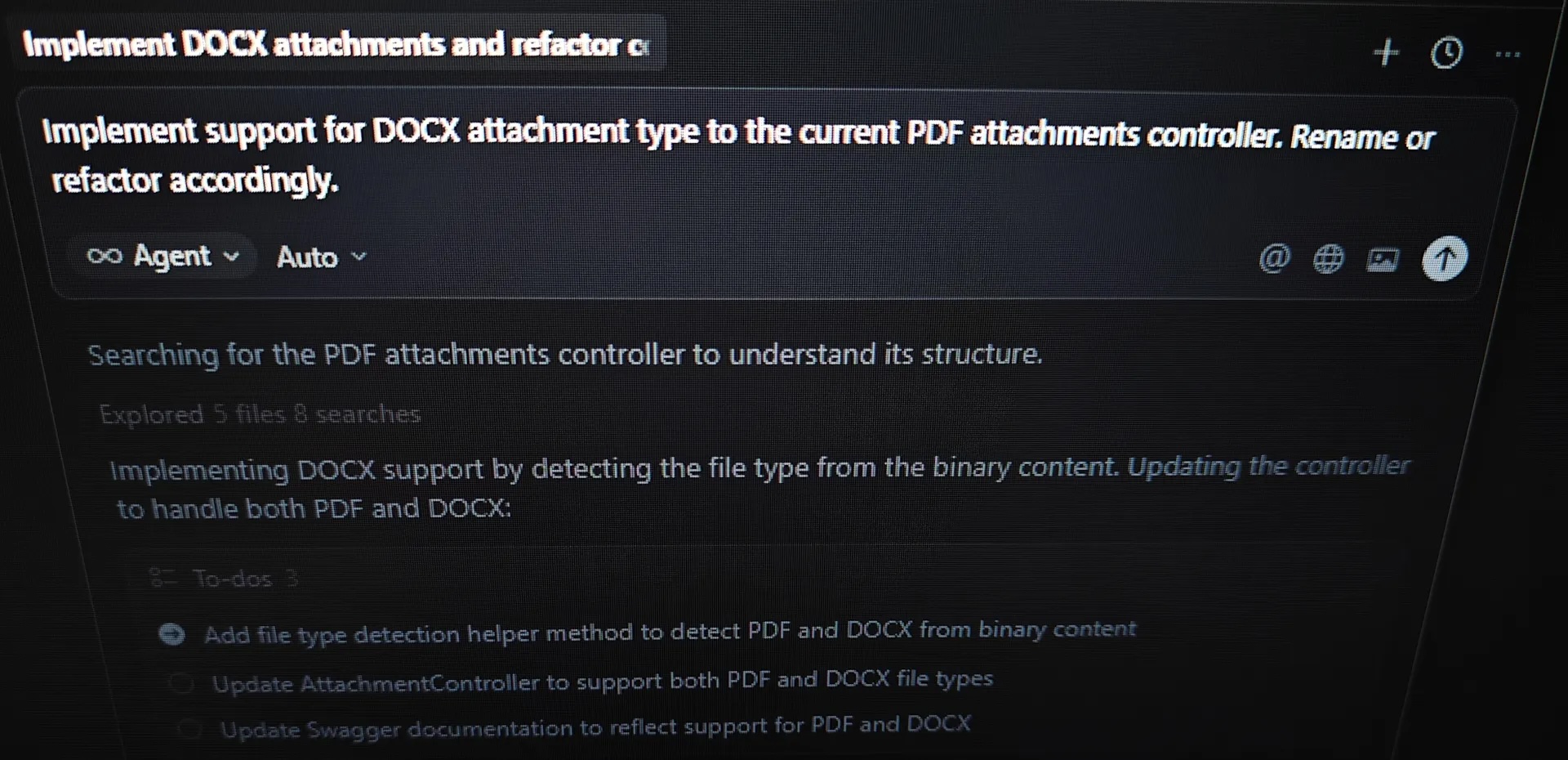

Running your own solution, or self-hosting an open-source one, is the only way to be 100% sure. Today, the response quality won’t match the closed-source SaaS, but it’s up to you what you are willing to trade. OpenCode and Forge code are pretty solid options for more complex agents. LM Studio is the best for a conversational UI, model management, and a local server. Last year, I even sniffed its network traffic locally just to be sure.
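To make the local-server idea concrete, here is a minimal sketch of sending a prompt to a model running on your own machine. It assumes a local server that speaks the OpenAI-style chat completions format on `http://localhost:1234/v1`; the port, the `local-model` name, and the function names are placeholders for illustration, so check what your own setup actually exposes.

```python
import json
import urllib.request

# Assumption: a local, OpenAI-compatible server is listening here.
# Nothing in this sketch talks to any host other than localhost.
LOCAL_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model") -> urllib.request.Request:
    """Build a chat-completion request aimed at the local server only."""
    payload = {
        "model": model,  # hypothetical name; use whatever model your server has loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{LOCAL_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumes the usual chat-completions response shape.
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask_local_model("Review this function for off-by-one errors: ...")` returns the model's reply as a string, and the proprietary snippet never leaves your machine. Keeping the base URL in one constant also makes it easy to audit where prompts can go.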

The Policies Are Coming - Eventually

Some organizations are waking up. New contract clauses are appearing:

- “Vendor agrees not to ingest client code into any trainable model.”

- “All AI tools must use zero-data-retention mode.”

- Enterprise plans with guarantees (at 10× the price)

Most companies, however, are still in the “move fast and pretend it’s fine” stage.

What Responsible Actually Looks Like (If Anyone Still Cares)

- Audit and map which tools are in use and what your team is actually putting into them.

- Default-deny external AI assistants where feasible. In the age of home offices and personal laptops, total enforcement is a fantasy, but you can still educate, monitor, and make the safe option the path of least resistance.

- Pay for enterprise tiers with absolute zero-retention guarantees when you need cloud AI, set up a company-wide standard, and assign seats in your enterprise-licensed account to employees so they don’t need to find their own (less secure) ways.

- Use a local setup for the sensitive stuff; models are good enough and not that hard to set up nowadays.

- Accept that “10× productivity” comes with a hidden 100× risk multiplier.
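As a small illustration of the "audit" and "make the safe option easy" points, here is a rough sketch of a check that could run before a snippet is sent to any external assistant. Everything in it is an assumption for illustration: the pattern list, the `corp.example.com` hostname, and the function names are made up, and a regex scan like this is a speed bump, not a guarantee. It will not recognise a proprietary algorithm, only obvious markers and secrets.

```python
import re

# Illustrative patterns only; a real policy would be tuned to your own codebase.
SENSITIVE_PATTERNS = {
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_secret": re.compile(
        r"(?i)\b(?:password|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
    # Hypothetical internal domain; replace with your organisation's own.
    "internal_host": re.compile(r"\b[\w.-]+\.corp\.example\.com\b"),
    "confidential_marker": re.compile(r"(?i)\b(?:confidential|proprietary)\b"),
}


def find_sensitive(text: str) -> list[str]:
    """Return the sorted names of all patterns that match the text."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )


def is_safe_to_send(text: str) -> bool:
    """True only when none of the illustrative patterns match."""
    return not find_sensitive(text)
```

A check like this could sit in a clipboard helper, an editor plugin, or a proxy in front of the approved AI endpoint, logging which categories were flagged so the audit has real numbers behind it.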

The Soul of the Craft

Code is distilled experience, hard-won domain knowledge, and competitive advantage. We used to guard it like family.

Now we feed it to trillion-dollar language models because autocomplete feels nice.

It’s progress, all right. Just not the kind any of us should be proud of.

The tools aren’t evil. Our collective amnesia is.

And once your code is baked into the next model release, there’s no git revert in the real world.